Summary: NVIDIA's graphics cards are familiar to gamers, and they are gradually becoming part of our daily lives.

NVIDIA's graphics cards are familiar to gamers, and they are gradually becoming part of our daily lives. Recently, the world's first "ray tracing" GPU, the Quadro RTX, was launched, and Jensen Huang (Huang Renxun), the founder and CEO of NVIDIA, called it "the most important GPU since CUDA." NVIDIA spent ten years refining this graphics card. Its arrival will upend the way graphics rendering is computed today.

We were honored to invite Mr. Liwei Zhao, Head of Architecture for NVIDIA Asia Pacific, who held an in-depth exchange with more than 20 entrepreneurs on the topic "Frontier Technology Progress in GPU Computing and Its Application in the Field of AI." If you work in the field of artificial intelligence, read on.

1. The latest technological progress of GPU computing

Zhao Liwei: I am very fortunate to have experienced the entire development of IT over the past 20 years, from its beginnings to its widespread adoption. Twenty years ago, I was at IBM. At that time I did not have a PC, and all email ran on the mainframe. I had a floppy disk that served as my key: I could insert it into any of the many computers in the office and access my mail service on the host. You could call this the pre-PC era.

In the past few years, computing has moved from the PC to mobile computing, to the cloud, and on to artificial intelligence. But AI research did not begin in the past two years; it began decades ago. So why is artificial intelligence so hot now? The answer is inseparable from the development of three factors: algorithms, computing power, and data.

Source: http://news.ikanchai.com/2017/1204/179891.shtml

So how did these three factors interact to drive artificial intelligence from 2012 to its current state? Here is a short story.

You may have heard of Alex Krizhevsky, who during his Ph.D. designed AlexNet, the first true deep neural network in history: eight learned layers containing 60 million parameters. His advisor Hinton (known as the "father of neural networks") did not support making this the research direction of his doctoral dissertation, because computation at the time ran on CPUs. Training such a model once took several months; the parameters then had to be adjusted by hand and the model retrained. Getting a reliable model this way takes dozens of training runs; even with luck it takes more than a dozen, which could add up to decades. But Alex, a typical geek, did not give up. Besides studying mathematics, he had also learned a great deal about programming, including CUDA.

CUDA is a parallel computing platform and programming model created by NVIDIA. It uses the capabilities of the graphics processing unit (GPU) to achieve a significant increase in computing performance. NVIDIA launched CUDA in 2006. Since then, its stock price has risen from about $7 to more than $260.

Alex used CUDA to reprogram his model and bought two GTX 580 graphics cards, which were very powerful at the time. Training AlexNet took 6 days, and he kept tuning and improving it. Later he entered the ImageNet competition led by Fei-Fei Li and won that year's championship. The image recognition accuracy AlexNet achieved was far ahead of second place. After the competition, Alex and his advisor Hinton set up a company, which Google acquired for $400 million a few months later. This is a story of wealth created by the GPU: the first combination of the GPU and a deep neural network created 400 million US dollars of value.

NVIDIA® GeForce® GTX 580

After this, neural network models went through an explosion similar to the Cambrian period. Before 2012, people were doing the research, but there was not enough computing power to support the algorithms. The emergence of a new computing method, GPU Computing, made it possible to train this type of neural network model. This led to an explosion of models and brought us into the era of artificial intelligence.

Today, you can use Caffe, TensorFlow, Theano, and other open source deep learning platforms to implement your own algorithms, or you can program directly in CUDA. The models now proposed by the leading companies in artificial intelligence research have become very complex. A single model can reach 1 T or even several T in size and contain billions or even tens of billions of parameters, and the amount of data involved is easy to imagine. Such models are harder to train. In this way the three factors are intertwined, each driving and improving the others.

Everyone knows the famous Moore's Law: at constant price, the number of components that fit on an integrated circuit doubles about every 18-24 months, and performance doubles with it. In other words, the computing performance that one dollar can buy more than doubles every 18-24 months. This law describes the pace of progress in information technology. However, according to calculations OpenAI published at the beginning of this year, from the emergence of AlexNet to the end of last year, a span of about 5 years, the computing power demanded by AI model training increased 300,000-fold.

In the first 25 years of Moore's Law, performance improved 10-fold every 5 years, or 100,000-fold over 25 years. That is the increase in computing power Moore's Law delivered in the CPU era, but it falls far short of what AI models demand. To meet this demand, we keep refining our technology at the GPU level and improving performance in every respect. On this basis, more and more people have begun to program and train their own models with CUDA, and Google, Facebook, and others are building their own open source deep learning platforms on CUDA.
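To make the gap concrete, the two growth figures quoted in the talk can be converted into doubling times. The sketch below is only a back-of-the-envelope calculation; the helper name `doubling_time_months` is made up for illustration, and the inputs are the speaker's round numbers (10-fold in 5 years for Moore's Law, 300,000-fold in about 5 years for AI training demand).

```python
import math


def doubling_time_months(growth_factor: float, years: float) -> float:
    """Months needed for one doubling, given total growth over a span of years."""
    return years * 12 / math.log2(growth_factor)


# Figures quoted in the talk (round numbers, not measurements).
moore_months = doubling_time_months(10, 5)           # 10x in 5 years
ai_months = doubling_time_months(300_000, 5)         # 300,000x in about 5 years

# Compounding 10x per 5 years over 25 years gives the 100,000x figure.
moore_25_years = 10 ** (25 // 5)

print(f"Moore's Law pace: one doubling every {moore_months:.1f} months")
print(f"AI training demand: one doubling every {ai_months:.1f} months")
print(f"Moore's Law over 25 years: {moore_25_years:,}x")
```

Under these assumptions, the Moore's Law figure corresponds to a doubling roughly every 18 months, while the AI training figure corresponds to a doubling roughly every 3 to 4 months.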

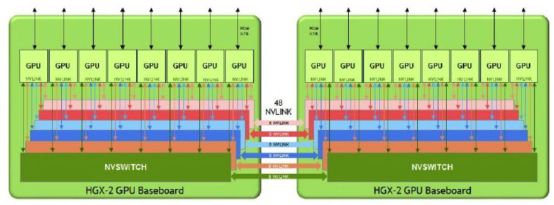

NVIDIA launched the HGX-2 platform, and the DGX-2 server based on it, at the GPU Technology Conference in March 2018. It is a high-density, high-performance system with excellent thermal design. The core of the DGX-2 architecture is the NVSwitch memory fabric. In essence, NVSwitch creates one large 512 GB shared memory space across the GPU nodes. With a power consumption of 10 kilowatts, the system reaches nearly 2 petaflops of Tensor Core computing power.
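The 512 GB and 2 petaflops figures can be reproduced with simple arithmetic. The sketch below assumes the publicly listed DGX-2 configuration, which the talk itself does not state: 16 Tesla V100 GPUs, each with 32 GB of memory and a peak Tensor Core throughput of 125 teraflops. Only the 10 kW power figure comes from the talk.

```python
# Assumed public DGX-2 specs (not stated in the talk).
GPU_COUNT = 16                  # Tesla V100 GPUs per DGX-2
MEM_PER_GPU_GB = 32             # memory per GPU, in GB
TENSOR_TFLOPS_PER_GPU = 125     # peak Tensor Core throughput per GPU

# Figure quoted in the talk.
POWER_KW = 10

total_mem_gb = GPU_COUNT * MEM_PER_GPU_GB
total_pflops = GPU_COUNT * TENSOR_TFLOPS_PER_GPU / 1000

# Peak efficiency: convert petaflops to gigaflops and kilowatts to watts.
gflops_per_watt = (total_pflops * 1_000_000) / (POWER_KW * 1000)

print(f"Shared memory space: {total_mem_gb} GB")
print(f"Peak Tensor Core throughput: {total_pflops} PFLOPS")
print(f"Peak efficiency: {gflops_per_watt:.0f} GFLOPS per watt")
```

These are peak numbers; sustained throughput on a real training job would be lower.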

Block diagram of the embedded NVSwitch topology of the HGX-2 platform (Source: NextPlatform)

GPU Computing is not a matter of hardware alone. How to apply this computing power to AI algorithms and real application scenarios is what most people care about. When people mention NVIDIA, they may think of it as a chip company, but in fact we have about 12,000 employees worldwide, 11,000 of whom are engineers, and 7,000 of those engineers are software engineers. They work together to build and improve the artificial intelligence ecosystem based on GPU Computing.

At present, the application scenarios of artificial intelligence are concentrated in the consumer Internet, represented by BATJ and TMD in China and by FANG, Apple, Microsoft, and Netflix in the United States. These companies are the first pioneers in the field of artificial intelligence. They have invested heavily, built up a great deal of computing power, and recruited the best-known Ph.D.s in the industry. Each of their services has more than 100 million daily active users (DAU), so they collect a great deal of data. At the 2018 Baidu Create developer conference, Robin Li mentioned the concept of the Intelligent Chasm, which can be understood as an intelligence gap. He said that, compared with the computing power and data these leading companies have accumulated, the computing power of all other companies in the world combined may only match theirs, or even fall short. This gap in computing power and data is like a chasm.

So how can we make these seemingly out-of-reach AI algorithms, relatively expensive computing power, and hard-to-obtain data more accessible? This is what we have been doing and what we will continue to do.

Take TensorRT as an example. NVIDIA TensorRT is a high-performance neural network inference engine used to deploy deep learning applications in production. Applications include image classification, segmentation, and object detection, and TensorRT delivers maximum inference throughput and efficiency for them. TensorRT is the first programmable inference accelerator that can accelerate both existing and future network architectures. With the substantial speedup TensorRT provides, service providers can deploy these computationally intensive AI workloads at an affordable cost.

2. AI industry case studies

Beyond the Internet, common application scenarios for artificial intelligence include autonomous driving, healthcare, telecommunications, and so on.

1. Recommendation Engine

In the past, people looked for information; now information looks for people. You may have used short-video apps such as Kuaishou or Douyin. Behind these short videos are neural network algorithms. While you use a recommendation engine, dozens of models may be evaluating you. Five years ago, such a system may only have sensed your needs. Now it evaluates you along many dimensions and balances several goals: it must not only attract clicks but also keep you there long enough, and the algorithms for attracting clicks and for holding attention are quite different.

Almost all major Internet companies in China are training their own recommendation models so that every user sees personalized content. Recommendations matter a great deal to these companies, because almost all Internet monetization is tied to them. E-commerce goes without saying; the same holds for video services such as Kuaishou and Douyin in China and Netflix and Hulu abroad, for news services such as Google News and Toutiao, and for music, social networking, and more. Users' activity also supplies the company with new data, which can be used to train more effective models. On the one hand this improves the user experience, but on the other hand it may leave users unable to pull away from these products.

2. Healthcare

A large share of the members of NVIDIA's startup acceleration program are AI + healthcare projects. One of the major challenges for such projects is diagnosis. Diagnosing through deep learning is still difficult today, but the market is large. According to some industry reports, for the diagnosis of certain chronic diseases, assistance from deep learning algorithms can raise accuracy by 30%-40% while cutting cost in half.

Take retinal scanning as an example. People often say the eyes are the window to the soul; in fact, they are also a window to the body. The retina is rich in capillaries, and a retinal scan can reveal certain health problems, such as complications of diabetes, one of which is retinopathy, as well as cardiovascular disease.

In China, relatively few doctors can make a diagnosis from a retinal scan, and some cannot make such a diagnosis at all. With deep learning, the experience of the doctors who can may be collected and used to assist diagnosis. At present this technology is still hard to bring into hospitals, but some insurance companies are very willing to use it to estimate the probability that a customer will fall ill, which helps them set policy amounts.

3. Autonomous driving

To support research and development on autonomous driving, NVIDIA runs its own server farm. It contains 1000 DGX-1 systems with 1 E (1 E = 1024 P = 1024 × 1024 T) of floating-point computing capability, used to train autonomous driving models. A car driving on the road for one day generates data on the terabyte scale, and a year of driving may generate data on the petabyte scale. Even so, collecting data from real vehicles alone is far from enough. By some estimates, a self-driving vehicle has to drive at least 100,000 miles to barely meet the requirements for going on the road. For now, the disengagement rate of self-driving vehicles is not high: with Google's self-driving vehicles, a human has to take the steering wheel about once every few thousand miles, and the situation elsewhere is basically the same.
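The scale figures above can be checked with a little arithmetic. The sketch below uses only the numbers in the talk (1000 systems, 1 E in total, and the 1024-based unit steps); the variable names are illustrative, and the daily data rate is derived rather than quoted.

```python
# Unit convention used in the talk: 1 E = 1024 P = 1024 * 1024 T.
UNITS_PER_STEP = 1024
DGX1_COUNT = 1000

# Total capability of the farm, expressed in P (peta) units.
farm_p = 1 * UNITS_PER_STEP

# Implied capability per DGX-1 system, in P units.
per_system_p = farm_p / DGX1_COUNT

# Daily data volume (in T) at which one car reaches 1 P in a year.
t_per_day_for_one_p_per_year = UNITS_PER_STEP / 365

print(f"Implied capability per DGX-1: {per_system_p:.3f} P")
print(f"Daily data for 1 P per year: {t_per_day_for_one_p_per_year:.1f} T")
```

In other words, the talk's figures imply roughly one peta-scale unit of compute per DGX-1, and a car that records a few terabytes a day does reach the petabyte scale within a year.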

Our current approach is to take the model from the real car into the server farm and train it in a high-fidelity simulation environment on the servers. The training process generates new data, and that data is then used to train new models. In this way we try to accelerate the training of autonomous driving models.

Image source: pixabay.com

After sharing these AI application scenarios, Zhao Liwei also introduced NVIDIA's new product, Quadro RTX, in detail; it helps the game and film industries achieve real-time ray tracing and rendering. He closed with NVIDIA's new office buildings in Silicon Valley, "Endeavor" and "Voyager," which express NVIDIA's continuing effort in the field of artificial intelligence and its hope that AI technology will lead mankind into the unknown.
