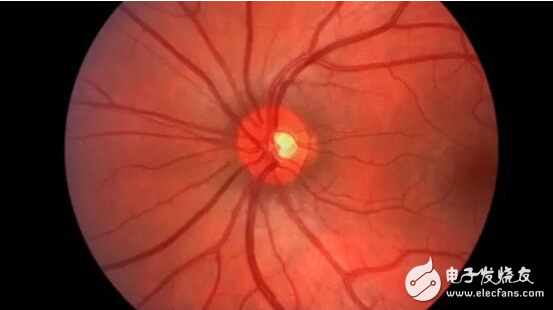

Google's DeepMind has developed an artificial intelligence system that diagnoses disease by analyzing medical images, in what may be the first major application of artificial intelligence in medicine.

London-based DeepMind used thousands of retinal scans to train an artificial intelligence algorithm that detects symptoms of eye diseases more quickly and effectively than human experts.

After two years of work with the UK's National Health Service (NHS) and Moorfields Eye Hospital in London, DeepMind says the research has shown "promising signs", and the results have been submitted to a medical journal. Moorfields is one of the most famous eye hospitals in the world. If the results pass peer review, the technology could enter clinical trials within a few years.

Dominic King, clinical director of DeepMind Health, told the Financial Times: "In a specific area like medical imaging, you can see that we will make great progress with artificial intelligence in the next few years. Machine learning may come to play a more important role than it does at present, dealing with problems that are more sensitive and more specific."

DeepMind's algorithm was trained on anonymized 3D retinal scans provided by Moorfields, which physicians had carefully labeled for signs of disease. The company has now begun discussing clinical trials with hospitals, including Moorfields.

Because each scan contains millions of pixels of data, the algorithm can learn to look for signs of three of the most serious eye diseases: glaucoma, diabetic retinopathy, and age-related macular degeneration.

“We are optimistic about the results of this research, which will benefit people around the world and help eliminate avoidable sight loss,” said Peng Tee Khaw, director of research and development at Moorfields. “We hope to publish our research in a peer-reviewed journal next year.”

According to Dr. King, the technology is "universal," meaning it can be applied to other types of images. DeepMind said the next phase will be training algorithms to analyze radiotherapy scans and mammograms, in collaboration with University College London Hospitals and Imperial College London.

Labeling scans of head and neck cancer "is a five- or six-hour job, usually done when a doctor sits down after work," said a consultant who was marking up scans at Google's office. “The emergence of artificial intelligence comes just as the UK’s National Health Service is under tremendous pressure.”

As populations grow and age, the pressure on health systems is increasing, and hospitals around the world have begun to discuss whether artificial intelligence can reduce the burden of repetitive work. DeepMind's health team now numbers 100 people, up from 10 three years ago.

However, the relationship between large technology companies and hospitals is sensitive. Last year, the UK's data protection regulator ruled that an NHS trust had broken the law by giving DeepMind the medical records of 1.6 million patients.

The ruling concerned a trial of Streams, DeepMind's medical diagnostics app, which did not use AI; instead, it analyzed patient data and sent alerts to nurses and doctors when readings were abnormal.

The company has set up a research unit that focuses on the ethical and social impact of the AI it creates.

Dr. King said, “Artificial intelligence needs to be implemented and evaluated rigorously. It can be regarded as a new medical device, so you have to prove that it works before you can expand it to the entire health system.”
