Brains have evolved over a long time, from the simple worm brains of 500 million years ago to the diverse structures of today. The human brain, for example, can perform a wide variety of activities, many of them effortlessly: telling whether a visual scene contains animals or buildings feels trivial to us. To perform activities like these, artificial neural networks require careful design by experts over years of difficult research, and each network typically addresses one specific task, such as finding what is in a photograph, calling a genetic variant, or helping to diagnose a disease. Ideally, one would want an automated method to generate the right architecture for any given task.

One approach to generating these architectures is through evolutionary algorithms. Traditional research into the neuroevolution of topologies has laid the foundations that allow us to apply these algorithms at scale today, and many groups are working on the subject, including OpenAI, Uber Labs, Sentient Labs, and DeepMind. Of course, the Google Brain team has been thinking about automated machine learning (AutoML) too.

In addition to learning-based approaches (such as reinforcement learning), we wondered whether we could use our computational resources to programmatically evolve image classifiers at unprecedented scale. Can we reach good solutions with minimal expert participation? How good can today's artificially evolved neural networks be? We address these questions in two papers.

In "Large-Scale Evolution of Image Classifiers", presented at ICML 2017, we set up an evolutionary process with simple building blocks and trivial initial conditions. The idea was to start from scratch and let evolution at scale do the work of constructing the architecture. Starting from very simple networks, the process found classifiers comparable to the hand-designed models of the time. This was encouraging because it suggests that many applications may need little user participation.

For example, some users may need a better model but may not have the time to become machine learning experts. A natural next question was whether a combination of hand design and evolution could do better than either approach alone. Thus, in our more recent paper, "Regularized Evolution for Image Classifier Architecture Search" (2018), we participated in the process by providing sophisticated building blocks and good initial conditions (discussed below). Moreover, we scaled up computation using Google's new TPUv2 chips. This combination of modern hardware, expert knowledge, and evolution produced state-of-the-art models on CIFAR-10 and ImageNet, two popular image classification benchmarks.

An easy way

The following is an example of an experiment from our first paper.

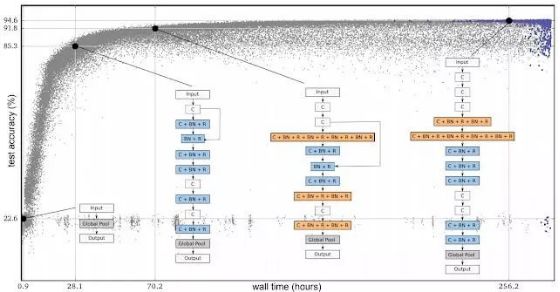

In the figure above, each dot is a neural network trained on CIFAR-10, a dataset commonly used to train image classifiers. Initially, the population consists of 1,000 identical simple seed models (no hidden layers). Starting from simple seed models is important: had we started from a high-quality model whose initial conditions contained expert knowledge, it would have been easier to end up with a high-quality model, and the result would say less about what evolution itself contributes. Once seeded with the simple models, the process advances in steps. At each step, a pair of neural networks is chosen at random. The network with higher accuracy is selected as a parent and is copied and mutated to generate a child, which is added to the population, while the other network dies out. All other networks remain unchanged during the step. As many such steps are applied in succession, the population evolves.

Progress of an evolution experiment. Each dot represents an individual in the population. The four diagrams show examples of discovered architectures, corresponding to the best individual (rightmost, selected by validation accuracy) and three of its ancestors.
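The selection step described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the paper's code: an "architecture" is just a list of hidden-layer widths, `fitness` is a hypothetical stand-in for training on CIFAR-10 and measuring validation accuracy, and the three mutations are made up for illustration. What the sketch does take from the text is the loop itself: identical trivial seeds, a random pair per step, the better one copied and mutated, and the worse one replaced.

```python
import random

# Hypothetical toy setup: an "architecture" is a list of hidden-layer widths,
# and fitness() stands in for training on CIFAR-10 and measuring accuracy.
WIDTHS = (16, 32, 64)

def fitness(arch):
    # Made-up score that peaks (at 0) for four hidden layers of width 32.
    return -abs(len(arch) - 4) - sum(abs(w - 32) for w in arch) / 32

def mutate(arch, rng):
    child = list(arch)  # copy the parent; it stays in the population unchanged
    kind = rng.choice(["add", "remove", "resize"]) if child else "add"
    if kind == "add":
        child.insert(rng.randrange(len(child) + 1), rng.choice(WIDTHS))
    elif kind == "remove":
        child.pop(rng.randrange(len(child)))
    else:
        child[rng.randrange(len(child))] = rng.choice(WIDTHS)
    return child

def evolve(population_size=1000, steps=20000, seed=0):
    rng = random.Random(seed)
    # Identical trivial seeds: no hidden layers at all.
    population = [[] for _ in range(population_size)]
    scores = [fitness(arch) for arch in population]
    for _ in range(steps):
        # Pick a random pair; the more accurate one becomes the parent.
        i, j = rng.sample(range(population_size), 2)
        parent, loser = (i, j) if scores[i] >= scores[j] else (j, i)
        # The mutated copy replaces the loser, which "dies out";
        # every other individual is left untouched this step.
        population[loser] = mutate(population[parent], rng)
        scores[loser] = fitness(population[loser])
    best = max(range(population_size), key=scores.__getitem__)
    return population[best], scores[best]
```

For example, `evolve(population_size=50, steps=2000)` returns the best toy architecture and its score. Because a loser is never better than its parent, the best score in the population can never decrease from one step to the next; in the real experiments each child is a network trained on CIFAR-10 rather than a cheap function call.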

It is worth noting that, although we minimized the participation of the researcher through simple initial architectures and intuitive mutations, a good amount of expert knowledge went into the building blocks those architectures were made of. These included important inventions such as convolutions, ReLUs, and batch-normalization layers. We were evolving an architecture made up of these components. The term "architecture" is not accidental: this is analogous to building a house out of high-quality bricks.

Combining evolution and manual design

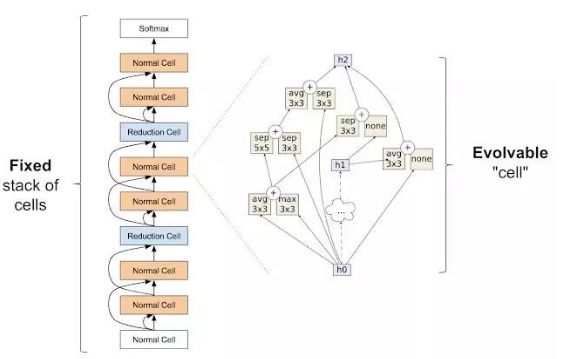

After our first paper, we wanted to reduce the search space to something more manageable by giving the algorithm fewer choices to explore. In terms of our architectural analogy, we removed from the search space all the possible ways of making large-scale errors, such as putting the walls above the roof. Similarly, in neural architecture search, fixing the large-scale structure of the network can help the algorithm. So how do we do this? The Inception-like modules that Zoph et al. introduced for architecture search have proven very powerful. Their idea is to use a deep stack of repeated modules called cells. The stack is fixed, but the architecture of the individual cells can change.

The building blocks introduced in Zoph et al. The diagram on the left shows the outer structure of the full neural network, which processes the input data from bottom to top through a stack of repeated cells. The diagram on the right shows the inner structure of a cell. The goal is to find a cell that yields an accurate network.
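To make the idea of a fixed stack with a searchable cell concrete, here is a minimal Python sketch. The `Cell` encoding, the `build_network` helper, the stack sizes, and the fixed "downsample" steps between stacks are hypothetical simplifications, not the exact structure used by Zoph et al.; the point is only that every candidate shares the same outer skeleton and differs solely in the repeated cell.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union

@dataclass(frozen=True)
class Cell:
    # Each combination is (input_a, op_a, input_b, op_b); inputs are indices of
    # earlier hidden states, with 0 and 1 being the cell's own two inputs.
    combinations: Tuple[Tuple[int, str, int, str], ...]

Layer = Union[str, Cell]

def build_network(cell: Cell, num_stacks: int = 3, cells_per_stack: int = 2) -> List[Layer]:
    """Expand one searched cell into a fixed outer skeleton (assumed layout)."""
    network: List[Layer] = ["stem"]
    for stack in range(num_stacks):
        network.extend([cell] * cells_per_stack)  # the same cell, repeated
        if stack < num_stacks - 1:
            network.append("downsample")  # fixed part: never searched
    network.append("classifier")
    return network
```

Two different cells produce networks with identical skeletons; only the cell entries differ, which is exactly the part evolution is allowed to change.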

In our second paper, "Regularized Evolution for Image Classifier Architecture Search" (2018), we present the results of applying evolutionary algorithms to the search space described above. Mutations modify the cell by randomly reconnecting its inputs (the arrows in the right-hand diagram of the figure) or by randomly replacing operations (for example, they can replace the "max 3x3" in the figure, a 3x3 max-pooling operation, with an arbitrary alternative). These mutations are still relatively simple, but the initial conditions are not: the population is now initialized with models that must conform to the outer stack of cells, which was designed by an expert.

Even though the cells in these seed models are random, we are no longer starting from simple models, which makes it easier to reach high-quality models in the end. If the evolutionary algorithm contributes meaningfully, the final networks should be significantly better than the networks we already know can be built within this search space. Our paper shows that evolution can indeed find state-of-the-art models that match or outperform hand designs.

A controlled comparison

Even though the mutation/selection evolutionary process is not complicated, perhaps an even more straightforward approach (such as random search) could do just as well. Other alternatives, though not simpler, also exist in the literature (such as reinforcement learning). Because of this, the main purpose of our second paper was to provide a controlled comparison between these techniques.

Comparison of evolution, reinforcement learning, and random search for architecture search. These experiments were performed on the CIFAR-10 dataset, under the same conditions as Zoph et al., where this search space was originally used with reinforcement learning.

The figure above compares evolution, reinforcement learning, and random search. On the left, each curve shows the progress of one experiment; evolution is faster than reinforcement learning in the early stages of the search. This matters because, when less compute is available, experiments may have to stop early.

Moreover, evolution was quite robust to changes in the dataset or the search space. Overall, the goal of this controlled comparison is to provide the research community with the results of a computationally expensive experiment. In doing so, we hope to make architecture search easier for everyone by providing a case study of how the different search algorithms relate. Note, for example, that the figure above shows the final models obtained by evolution achieving very high accuracy while using fewer floating-point operations.
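A controlled comparison hinges on giving every method the same search space, the same evaluation function, and the same evaluation budget, and on repeating each run over several seeds. The sketch below shows that setup with random search and a simple pairwise-tournament evolution. `toy_accuracy` is a hypothetical, cheap stand-in for training and validating a network, the four-slot search space is made up for illustration, and reinforcement learning is left out to keep it short.

```python
import random
import statistics

# Hypothetical toy search space shared by every method in the comparison.
OPS = ("identity", "conv3x3", "sep_conv3x3", "max3x3", "avg3x3")
LENGTH = 4

def toy_accuracy(arch):
    # Cheap stand-in for train-and-validate (an assumption): share of conv slots.
    return sum(op.endswith("conv3x3") for op in arch) / LENGTH

def random_arch(rng):
    return tuple(rng.choice(OPS) for _ in range(LENGTH))

def random_search(budget, rng):
    # Every evaluation spends the budget on a fresh, independent sample.
    return max(toy_accuracy(random_arch(rng)) for _ in range(budget))

def tournament_evolution(budget, rng, population_size=10):
    # Seed evaluations count against the same budget as random search's samples.
    population = [random_arch(rng) for _ in range(population_size)]
    scores = [toy_accuracy(arch) for arch in population]
    for _ in range(budget - population_size):
        i, j = rng.sample(range(population_size), 2)
        win, lose = (i, j) if scores[i] >= scores[j] else (j, i)
        child = list(population[win])
        child[rng.randrange(LENGTH)] = rng.choice(OPS)  # single-slot mutation
        population[lose] = tuple(child)
        scores[lose] = toy_accuracy(child)
    return max(scores)

def compare(budget=100, repeats=20):
    # Same space, same evaluator, same budget; average the best score over seeds.
    methods = {"random": random_search, "evolution": tournament_evolution}
    return {
        name: statistics.mean(search(budget, random.Random(seed)) for seed in range(repeats))
        for name, search in methods.items()
    }
```

The toy numbers from `compare()` are not meant to reproduce the paper's findings; the useful part of the sketch is that both methods spend exactly `budget` evaluations and run over the same seeds.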

An important feature of the evolutionary algorithm we used in our second paper is a form of regularization: instead of letting the worst neural networks die, we remove the oldest ones, regardless of how good they are. This improves robustness to changes in the task being optimized and tends to produce more accurate networks in the end. One reason may be that, since we did not allow weight inheritance, all networks must train from scratch. Therefore, this form of regularization selects for networks that remain good when they are retrained. In other words, a model can be more accurate just by chance, because noise in the training process means that even identical architectures may get different accuracy values. Only architectures that remain accurate across generations survive in the long run, which selects for networks that retrain well. More details of this conjecture can be found in the paper.
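The regularized (aging) variant replaces "remove the worst" with "remove the oldest". A minimal sketch follows, assuming a toy search space of four op slots and a hypothetical `train_and_eval` that adds Gaussian noise to a fixed true quality, mimicking how retraining the same architecture from scratch can give different accuracies. The population is a queue: each cycle samples `sample_size` members with replacement, mutates the best of them, appends the child on the right, and removes the oldest member from the left.

```python
import collections
import random

# Hypothetical toy search space: an architecture is a tuple of 4 op names.
OPS = ("identity", "conv3x3", "sep_conv3x3", "max3x3", "avg3x3")
LENGTH = 4

def train_and_eval(arch, rng):
    # Assumed stand-in for training from scratch: a fixed true quality plus
    # noise, so identical architectures can score differently each time.
    true_quality = sum(op.endswith("conv3x3") for op in arch) / LENGTH
    return true_quality + rng.gauss(0.0, 0.05)

def mutate(arch, rng):
    child = list(arch)
    child[rng.randrange(LENGTH)] = rng.choice(OPS)
    return tuple(child)

def aging_evolution(population_size=50, cycles=1000, sample_size=10, seed=0):
    rng = random.Random(seed)
    population = collections.deque()  # oldest on the left, newest on the right
    history = []  # every model ever trained; `cycles` bounds its length
    while len(population) < population_size:
        arch = tuple(rng.choice(OPS) for _ in range(LENGTH))
        model = (arch, train_and_eval(arch, rng))
        population.append(model)
        history.append(model)
    while len(history) < cycles:
        # Tournament: sample members (with replacement) and take the best.
        sample = [rng.choice(population) for _ in range(sample_size)]
        parent = max(sample, key=lambda m: m[1])
        child_arch = mutate(parent[0], rng)
        child = (child_arch, train_and_eval(child_arch, rng))
        population.append(child)
        history.append(child)
        # Regularization: the OLDEST model dies, however accurate it is.
        population.popleft()
    return max(history, key=lambda m: m[1])
```

Replacing `population.popleft()` with removing the lowest-scoring member would give the non-regularized variant. Under noisy evaluation, that variant can keep an architecture alive just because it got one lucky score, whereas aging forces every lineage to keep re-earning its place through freshly retrained descendants.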

The state-of-the-art models we evolved are nicknamed AmoebaNets and are one of the latest results of our AutoML efforts. All of these experiments required a lot of computation: we used hundreds of GPUs/TPUs. Much as a single modern computer can outperform thousands of decades-old machines, we hope that in the future these experiments will become commonplace. Here we aimed to provide a glimpse of that future.
