Over the past two years, running real-time artificial intelligence on mobile devices has become a clear trend. Last year, Google launched TensorFlow Lite, a neural network computing framework for mobile and embedded devices, which pushed this trend further. How does TensorFlow Lite work in practice? This article describes how TFLite is used for document recognition in Youdao Cloud Notes and summarizes TFLite's characteristics for your reference.

In recent years, Youdao's technical team has done a great deal of exploration and application work on real-time AI on mobile devices. After Google unveiled TensorFlow Lite (TFLite) in November 2017, the team immediately followed up on the framework and quickly put it to use in the Youdao Cloud Notes product.

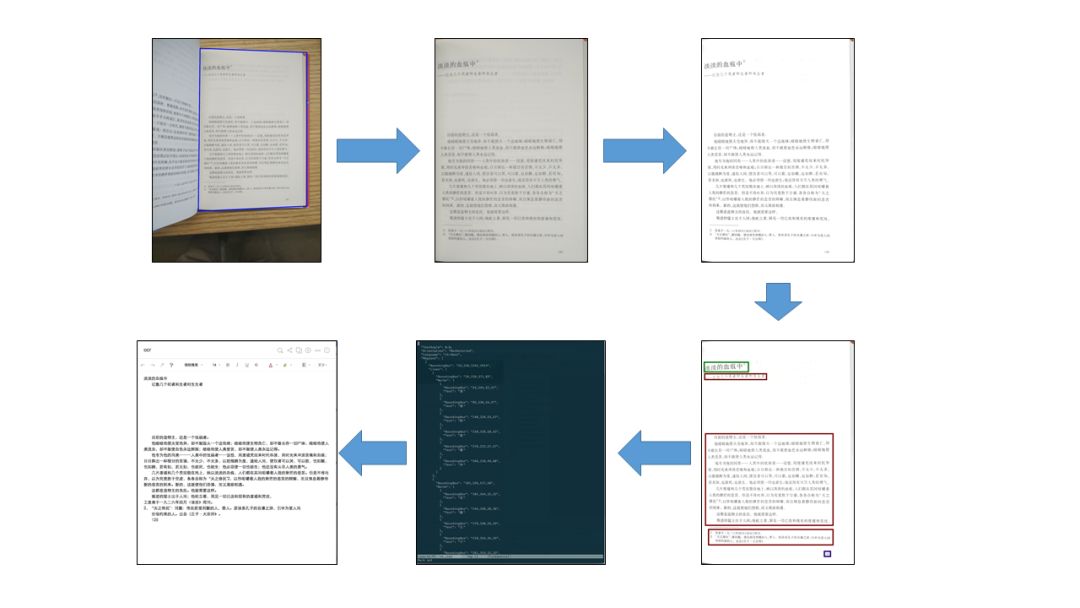

The following describes how TFLite is used for document recognition in Youdao Cloud Notes.

Introduction to document recognition

1. Definition of document recognition

Document recognition originally arose as a problem while developing the document scanning feature of Youdao Cloud Notes. The document scanning feature aims to locate the document within a photo taken by the user, rectify it (restoring its aspect ratio), recognize the text in it, and finally produce either a clean image or a formatted text note. Implementing this feature requires the following steps:

Recognize the document area: Separate the document from the background and determine its four corners;

Stretch the document area and restore the aspect ratio: Using the four corners and the principles of perspective projection, calculate the original aspect ratio of the document and restore the document area to a rectangle;

Color enhancement: Depending on the type of document, choose a suitable color enhancement method so that the document image looks clean;

Layout recognition: Understand the layout of the document image and find the text regions of the document;

OCR: Convert the "text" that exists as an image into machine-encoded text;

Generate notes: Generate formatted notes from the OCR results based on the layout of the document picture.
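The "stretch the document area" step above can be illustrated with a perspective transform (homography) that maps the four detected corners onto an upright rectangle. The following is only a minimal sketch in plain Python, not the team's implementation: the corner coordinates and the 400x600 target size are made-up values, and the sketch takes the target aspect ratio as given instead of estimating it from the perspective.

```python
def solve_linear(a, b):
    """Solve a * x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix, so every row operation acts on a and b together.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def homography(src, dst):
    """Return the 3x3 matrix that maps four src points onto four dst points."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def warp_point(h, x, y):
    """Apply the homography to one point (with the perspective divide)."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical corners found in a photo: top-left, top-right, bottom-right, bottom-left.
corners = [(120, 80), (520, 110), (560, 700), (90, 650)]
# Assumed output rectangle (width 400, height 600).
target = [(0, 0), (400, 0), (400, 600), (0, 600)]
h = homography(corners, target)
```

To rectify a whole image, one would typically compute the inverse mapping and sample the source photo for every output pixel; the sketch only shows how the mapping itself is obtained.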

Document recognition is the first step of the document scanning feature, and it is also the part that has to cope with the most complex scenes.

2. The role of document recognition in the AI technical matrix

In recent years, building on deep neural network algorithms, we have done a series of work on processing and understanding natural language, images, speech, and other media data, producing neural-network-based technologies such as multilingual translation, OCR (Optical Character Recognition), and speech recognition. Together, these technologies let users record content in whatever way is most natural and comfortable for them, after which the content is understood by technology and converted into text for further processing. Seen this way, our technologies form a network centered on natural language, in which different media forms are converted into one another.

Document recognition is an inconspicuous but indispensable link in the chain that turns images into text. With it, we can locate exactly the documents that need processing within an image and extract them for further processing.

3. Introduction to the document recognition algorithm

Our document recognition algorithm is based on an FCNN (Fully Convolutional Neural Network), a special kind of CNN (convolutional neural network) that produces an output for every pixel of the input image (by contrast, an ordinary CNN produces one output per input image). Therefore, we can annotate a batch of images that contain documents, labeling the pixels near the document edges as positive samples and all other pixels as negative samples. During training, the image is fed to the FCNN as input, and the output is compared with the labels to obtain the training loss that drives training. For more details on the document recognition algorithm, see the Youdao technical team's article "Document Scanning: Practice of Deep Neural Networks on the Mobile Side".
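To make the per-pixel labeling and loss concrete, here is a small sketch in plain Python. It is an illustration under assumptions, not the team's training code: the article does not name the loss function, so binary cross-entropy is used as a common choice, and the `tolerance` parameter (how close to an edge a pixel must be to count as positive) is hypothetical.

```python
import math


def point_segment_distance(px, py, ax, ay, bx, by):
    """Distance from point (px, py) to the segment from (ax, ay) to (bx, by)."""
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        t = 0.0
    else:
        t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def edge_label_map(height, width, corners, tolerance=1.0):
    """Label pixels near the document outline as 1 (positive), all others as 0."""
    edges = list(zip(corners, corners[1:] + corners[:1]))
    return [
        [
            1 if any(
                point_segment_distance(x, y, ax, ay, bx, by) <= tolerance
                for (ax, ay), (bx, by) in edges
            ) else 0
            for x in range(width)
        ]
        for y in range(height)
    ]


def pixelwise_bce(pred, labels, eps=1e-7):
    """Mean binary cross-entropy between per-pixel predictions and labels."""
    total, count = 0.0, 0
    for pred_row, label_row in zip(pred, labels):
        for p, t in zip(pred_row, label_row):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
            count += 1
    return total / count


# A toy 8x8 "photo" whose document corners are given as (x, y) pairs.
labels = edge_label_map(8, 8, [(1, 1), (6, 1), (6, 6), (1, 6)], tolerance=0.5)
uniform = [[0.5] * 8 for _ in range(8)]
loss = pixelwise_bce(uniform, labels)
```

In a real training setup, the predictions would come from the network's per-pixel output and the loss would be minimized by the framework's optimizer.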

Since the core of the algorithm is a CNN, the operators it mainly uses are those common in CNNs, such as convolution, depthwise convolution, fully connected, pooling, and ReLU layers.

4. Document Recognition and TensorFlow

There are many frameworks that can train and deploy CNN models. We chose to use the TensorFlow framework based on the following considerations:

TensorFlow provides a large and comprehensive set of operators, and writing new operators yourself is not troublesome. In the early stages of algorithm development, many different network structures need to be tried, and all sorts of unusual operators get used. At that point, a framework with comprehensive operator coverage saves a lot of effort;

TensorFlow covers multiple platforms such as server, Android, and iOS well, and has complete operator support on each platform;

TensorFlow is one of the more mainstream choices, which means that when difficulties are encountered, it is easier to find ready-made solutions on the Internet.

5. Why use TFLite in document recognition?

Before TFLite was released, document recognition in Youdao Cloud Notes was based on the mobile TensorFlow library (TensorFlow Mobile). When TFLite was released, we wanted to migrate to it. The main driving force for the migration was the size of the linked library.

After compression, the TensorFlow dynamic library on Android is approximately 4.5 MB. To cover the multiple processor architectures on Android, you may need to package around four dynamic libraries, about 18 MB in total. The TFLite library, by contrast, is about 600 KB, so even packaging libraries for four architectures takes only about 2.5 MB. That is a substantial saving for a mobile app, where package size is at a premium.
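The size comparison above is simple arithmetic, spelled out below as a tiny Python sketch. The per-library sizes are the approximate figures quoted in the text; treating 1 MB as 1000 KB and shipping exactly four architectures are simplifying assumptions.

```python
# Approximate sizes quoted in the article, in kilobytes (1 MB taken as 1000 KB).
TF_MOBILE_LIB_KB = 4500   # compressed TensorFlow Mobile dynamic library
TFLITE_LIB_KB = 600       # TFLite library
NUM_ARCHITECTURES = 4     # assumed number of processor architectures packaged


def total_library_kb(per_arch_kb, architectures=NUM_ARCHITECTURES):
    """Total size when one copy of the library ships per architecture."""
    return per_arch_kb * architectures


tf_mobile_total = total_library_kb(TF_MOBILE_LIB_KB)  # about 18 MB
tflite_total = total_library_kb(TFLITE_LIB_KB)        # 2400 KB, close to the quoted 2.5 MB
saving = tf_mobile_total - tflite_total
```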

TFLite introduction

1. What is TFLite

TFLite is a neural network computing framework for mobile and embedded devices that Google announced at Google I/O 2017; the developer preview was released in November 2017. Compared with TensorFlow, it has several advantages:

Lightweight. As mentioned above, the link library generated by TFLite is very small;

Few dependencies. Building TensorFlow Mobile depends on libraries such as protobuf, whereas TFLite does not need large dependencies;

Can use mobile hardware acceleration. TFLite can use hardware acceleration through the Android Neural Networks API (NNAPI): any accelerator chip that supports NNAPI can speed up TFLite. On most Android phones today, however, TFLite still runs on the CPU.

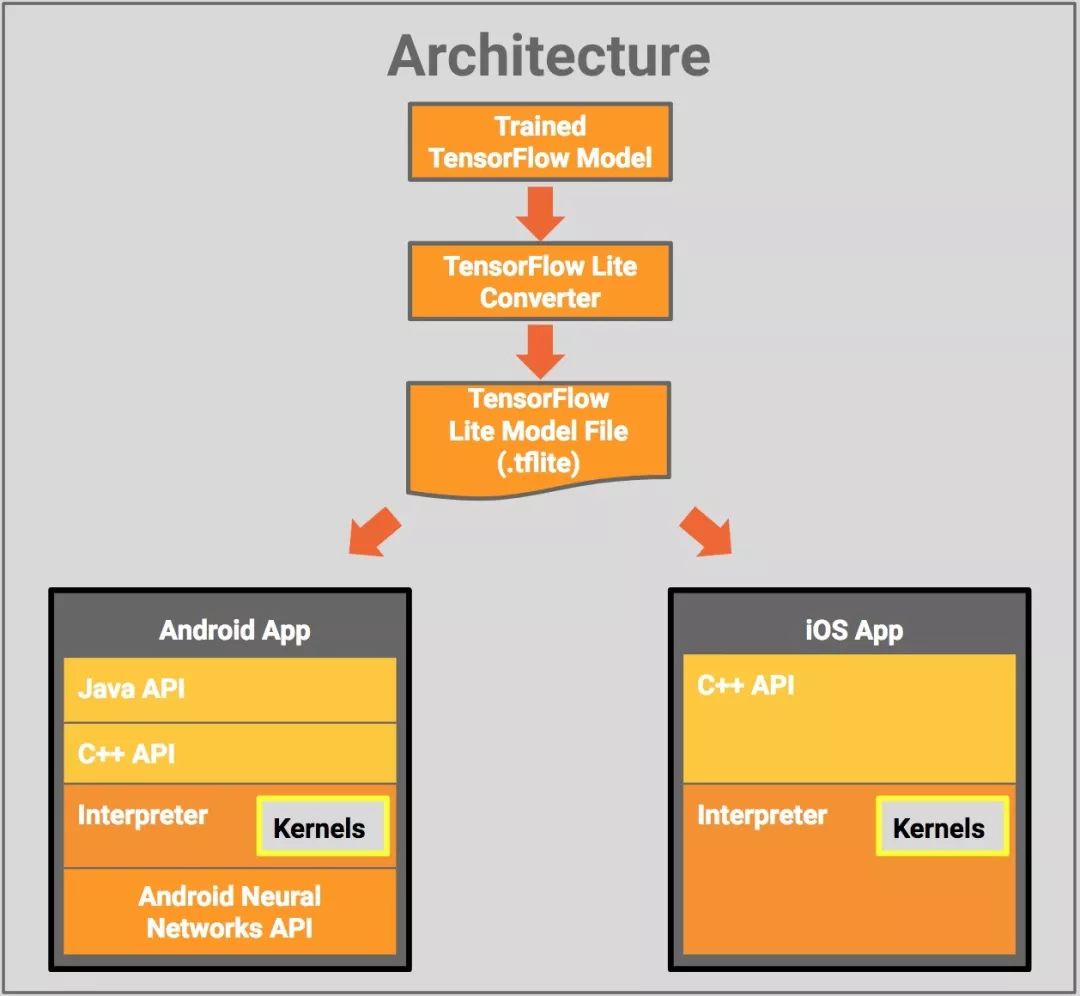

TensorFlow Lite Architecture Design

2. TFLite code structure

As users of TFLite, we also explored its code structure, which we share here.

Currently, the TFLite code is located under the "tensorflow/contrib/lite" folder in the TensorFlow project. There are several header/source files and some subfolders under the folder.

Among them, some of the more important header files are:

model.h: classes and methods related to the model file. The FlatBufferModel class reads and stores the model content, and InterpreterBuilder parses it;

interpreter.h: provides the Interpreter class used for inference, which is the class we work with most often;

context.h: provides the TfLiteContext struct, which stores tensors and some state. In practice it is usually wrapped inside Interpreter.
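The load, allocate, set input, invoke, read output cycle that these classes support can be sketched as follows. Note the assumptions: the article works with the C++ and Java interfaces, whereas this sketch uses the Python binding `tf.lite.Interpreter` found in later TensorFlow releases, purely for illustration; the helper names `load_interpreter` and `run_inference` are hypothetical.

```python
def load_interpreter(model_path):
    """Create a TFLite interpreter for a .tflite file (needs the tensorflow package)."""
    import tensorflow as tf  # imported lazily so run_inference has no hard dependency

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()  # must be called before tensors are set or read
    return interpreter


def run_inference(interpreter, input_data):
    """Feed one input tensor, run the graph, and return the first output tensor."""
    input_index = interpreter.get_input_details()[0]["index"]
    output_index = interpreter.get_output_details()[0]["index"]
    interpreter.set_tensor(input_index, input_data)
    interpreter.invoke()
    return interpreter.get_tensor(output_index)
```

With a real model, `input_data` would be a NumPy array whose shape and dtype match the entries returned by `get_input_details()`.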

In addition, there are several important subfolders:

kernels: operators are defined and implemented here. The register.cc file defines which operators are supported, and this list can be customized;

downloads: third-party libraries, mainly abseil (Google's extension to the C++ standard library), eigen (a matrix operation library), farmhash (a hashing library), flatbuffers (the library for the FlatBuffers model format used by TFLite), gemmlowp (Google's open-source low-precision matrix math library), and neon_2_sse (maps NEON instructions on ARM to the corresponding SSE instructions);

java: mainly code related to the Android platform;

nnapi: provides the calling interface for NNAPI. Worth a look if you want to implement NNAPI support yourself;

schema: the concrete definition of the FlatBuffers model format used by TFLite;

toco: the code for converting protobuf models to the FlatBuffers model format.

How do we use TFLite?

1. TFLite compilation

TFLite runs on both Android and iOS, and official build instructions are provided for each.

On Android, TFLite can be built with the bazel build tool. We will not repeat how to install and configure bazel here; readers who have built TensorFlow before should be familiar with it. According to the official documentation, the bazel target is "//tensorflow/contrib/lite/java/demo/app/src/main:TfLiteCameraDemo", which is a demo app. If you only want the library, build the "//tensorflow/contrib/lite/java:tensorflowlite" target instead to get the libtensorflowlite_jni.so library and the corresponding Java-layer interface.

See official documentation for more details:

https://github.com/tensorflow/tensorflow/blob/master/tensorflow/docs_src/mobile/tflite/demo_android.md

On iOS, TFLite is built with a Makefile. Running build_ios_universal_lib.sh on a Mac produces the library file tensorflow/contrib/lite/gen/lib/libtensorflow-lite.a. This is a fat library that bundles builds for the x86_64, i386, armv7, armv7s, and arm64 architectures.

See official documentation for more details:

https://github.com/tensorflow/tensorflow/blob/master/tensorflow/docs_src/mobile/tflite/demo_ios.md

The calling interface of the TFLite library also differs between the two platforms: Android provides a Java-layer interface, while iOS provides a C++-layer interface.

That said, the TFLite project structure is relatively simple. Once you are familiar with it, you can also build TFLite with whatever build tools you prefer.

2. Model conversion

TFLite no longer uses the old protobuf format (perhaps to reduce dependencies) and uses FlatBuffers instead. So the trained protobuf model file must be converted into the FlatBuffers format.

TensorFlow's official documentation gives guidance on model conversion. First, because TFLite supports fewer operators and does not support training-related operators, the unneeded operators must be removed from the model in advance, a step known as freezing the graph (Freeze Graph). Then the model format can be converted using TensorFlow's toco tool. Both tools are also built with bazel.

See official documentation for more details:

https://github.com/tensorflow/tensorflow/blob/master/tensorflow/docs_src/mobile/tflite/devguide.md

Missing operator

TFLite currently provides only a limited set of operators, mostly those used in CNNs, such as convolution and pooling. Our model is a fully convolutional neural network, and TFLite provides most of the operators it needs, but not conv2d_transpose (transposed convolution, also called deconvolution). Fortunately, this operator appears at the end of our network, so we can take the intermediate result just before the transposed convolution and compute the final result with our own C++ implementation of transposed convolution. Because the transposed convolution involves little computation, the running speed is essentially unaffected.
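For readers unfamiliar with the operator, the following plain-Python sketch shows what a transposed convolution computes. It is only an illustration: the team's version is written in C++ and is not shown in the article, and this sketch assumes a single channel, no padding, and a hypothetical default stride of 2.

```python
def conv2d_transpose(x, kernel, stride=2):
    """Single-channel 2-D transposed convolution without padding.

    Every input value scatters a scaled copy of the kernel into the output,
    with consecutive input positions placed `stride` output pixels apart.
    """
    in_h, in_w = len(x), len(x[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = (in_h - 1) * stride + k_h
    out_w = (in_w - 1) * stride + k_w
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(in_h):
        for j in range(in_w):
            for di in range(k_h):
                for dj in range(k_w):
                    out[i * stride + di][j * stride + dj] += x[i][j] * kernel[di][dj]
    return out


# A 2x2 feature map upsampled to 4x4 with a 2x2 kernel of ones.
feature_map = [[1, 2], [3, 4]]
ones = [[1, 1], [1, 1]]
upsampled = conv2d_transpose(feature_map, ones, stride=2)
```

Because the loops touch each input value only once per kernel element, the cost stays small when the operator is applied once at the end of the network, which matches the observation above that speed is essentially unaffected.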

What if, unfortunately, the operator that your model needs and TFLite lacks does not appear at the end of the network? You can implement a custom TFLite operator and register it in TFLite's kernels list so that the compiled TFLite library can handle it. In addition, the --allow_custom_ops option must be passed during model conversion so that operators TFLite does not support by default are kept in the model.

See official documentation for more details:

https://github.com/tensorflow/tensorflow/blob/master/tensorflow/contrib/lite/g3doc/custom_operators.md

TFLite advantages and disadvantages

Advantages: A good balance of library size, ease of development, cross-platform support, and performance

For comparison, Youdao's technical team selected several other mobile deep learning frameworks and analyzed each in terms of development convenience, cross-platform support, library size, and performance.

TensorFlow Mobile: because it shares its code with server-side TensorFlow, models trained on the server can be used directly, so development is very convenient; it supports Android and iOS, so cross-platform support is not a problem; as mentioned earlier, the library is relatively large; performance is on par with the mainstream.

Caffe2: models trained with Caffe can be converted to Caffe2 fairly easily, but some operators are missing, so development convenience is moderate; it supports Android and iOS, so cross-platform support is not a problem; the compiled library is relatively large, but it is a static library and can be compressed; performance is on par with the mainstream.

Metal/Accelerate: both are frameworks on iOS. They are relatively low-level and require model conversion plus hand-written inference code, so development is painful; they support only iOS; the libraries ship with the system, so library size is not an issue; they are very fast.

CoreML: the framework for iOS 11 announced at WWDC17. It comes with some model conversion tools, which work painlessly when only common operators are involved but are hard to use with custom operators; it supports only iOS 11; the library ships with the system, so library size is not an issue; it is very fast.

Finally, TFLite:

TFLite: its models are converted from models trained with TensorFlow, but some operators are missing, so development convenience is moderate; it supports Android and iOS, so cross-platform support is not a problem; the compiled library is very small; in our experiments, it ran faster than TensorFlow.

In summary, TensorFlow Mobile is easy to develop with and highly general, but its library is large and its performance is only mainstream (the mobile versions of other server-side neural network frameworks have similar characteristics). Lower-level libraries such as Metal/Accelerate are very fast, but they are not cross-platform and are painful to develop with. Neural network frameworks optimized for mobile, such as Caffe2 and TFLite, are relatively balanced. Although their operator coverage is incomplete at first, this problem should be resolved over time as long as the teams behind them keep developing the frameworks.

Advantages: Relatively easy to extend

Because TFLite's code is relatively simple (compared with TensorFlow) and its structure is easy to follow, it is relatively easy to extend. If you want to add an operator that TensorFlow has but TFLite lacks, you can add a custom class; if you want to add an operator that TensorFlow lacks as well, you can also modify the FlatBuffers model file directly.

Disadvantages: Op coverage is not comprehensive

As mentioned earlier, TFLite currently supports mainly CNN-related operators and has little support for the operators used in other kinds of networks. So if you want to move an RNN model to mobile, TFLite is not yet up to the task.

However, according to the latest Google TensorFlow Developer Summit, Google and the TensorFlow community are working hard to broaden op coverage. We believe that as more developers raise similar needs, more models will be well supported. This is also one of the reasons we chose a mainstream community like TensorFlow.

Disadvantages: Cannot yet support a wide range of computing chips

Because TFLite builds on NNAPI, it can in theory make use of all kinds of computing chips. At present, however, not many chips support NNAPI. We look forward to TFLite supporting more computing chips in the future. After all, there is an upper limit to how far neural network inference can be optimized on the CPU, and dedicated chips are the door to a new world.

Summary

Over the past two years, real-time artificial intelligence on mobile devices seems to have become a trend. The Youdao technical team has also made many attempts in mobile AI research and has brought offline real-time AI capabilities, such as offline neural machine translation (offline NMT), offline text recognition (offline OCR), and offline document scanning, into products including Youdao Dictionary, Youdao Translation Officer, and Youdao Cloud Notes. Because mobile AI is still developing rapidly, the various frameworks and computing platforms are not yet mature.

Here, taking the offline document recognition feature in Youdao Cloud Notes as a practical case, we have seen that TFLite is an excellent mobile AI framework that helps developers implement common neural networks on mobile devices with relative ease. In follow-up articles, we will share more of the Youdao technical team's explorations of real-time AI with TFLite and its applications in real products.
