To be clear about the terms: artificial intelligence (AI) is a science, machine learning (ML) is the most mainstream way of implementing AI, and deep learning (DL) is a branch of machine learning and currently its most popular one.

Deep learning is a very hot concept in the field of machine learning. After all the hype from the media and from high-profile social media accounts (the "big V" influencers), the concept has become almost mythical. Let me slowly unveil the mystery of deep learning. ^_^

The concept of deep learning was proposed by Hinton et al. in 2006. Building on the Deep Belief Network (DBN), they proposed an unsupervised, greedy layer-by-layer training algorithm, which offered hope for solving the optimization problems associated with deep structures. Deep structures built from multi-layer autoencoders were proposed afterwards. In addition, the convolutional neural network proposed by LeCun et al. was the first true multi-layer structure learning algorithm; it exploits spatial relationships to reduce the number of parameters and thereby improve training performance.

So what exactly is deep learning?

Deep learning (DL) is a family of machine learning methods based on learning representations of data, and it is loosely modeled on the neural structure of the human brain. The concept stems from the study of artificial neural networks. An Artificial Neural Network (ANN) abstracts the brain's network of neurons from the perspective of information processing, builds a simple model of it, and forms different networks according to how the units are connected; these are usually just called neural networks. Deep learning, whose models are also known as Deep Neural Networks (DNNs), therefore grew out of the earlier ANN model.

Deep learning is a new field in machine learning research. Its motivation is to build neural networks that simulate how the human brain analyzes and learns, mimicking the brain's mechanisms to interpret data such as images, sound, and text. The hope is that deep learning can bring computers closer to human-like intelligence, and its development prospects look very broad.

Like other machine learning methods, deep learning methods are divided into supervised learning and unsupervised learning, and the models built under the two frameworks are very different. For example, Convolutional Neural Networks (CNNs) are deep learning models trained with supervised learning, while Deep Belief Nets (DBNs) are deep learning models trained with unsupervised learning.

The topics involved in deep learning include: linear algebra, probability and information theory, underfitting and overfitting, regularization, maximum likelihood estimation and Bayesian statistics, stochastic gradient descent, supervised and unsupervised learning, deep feedforward networks, cost functions and backpropagation, sparse coding and dropout, adaptive learning algorithms, convolutional neural networks, recurrent neural networks, recursive neural networks, deep neural networks and deep stacking networks, long short-term memory (LSTM), principal component analysis, regularized autoencoders, representation learning, Monte Carlo methods, restricted Boltzmann machines, deep belief networks, softmax regression, decision trees and clustering algorithms, KNN and SVM, generative adversarial networks and directed generative networks, machine vision and image recognition, natural language processing, speech recognition and machine translation, finite Markov decision processes, dynamic programming, policy gradient algorithms, and reinforcement learning (Q-learning).

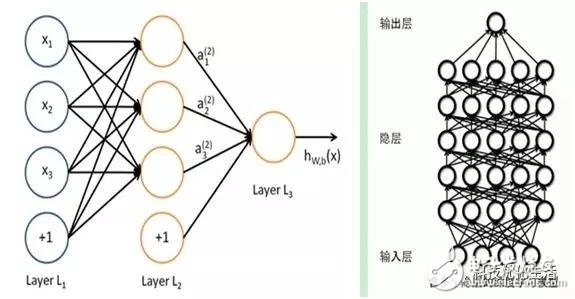

To discuss deep learning, you inevitably have to talk about "depth", and depth here means the number of layers. The computation that produces an output from an input can be represented by a flow graph: a graph in which each node represents an elementary computation together with a value, namely the result of applying that computation to the values of the nodes that feed into it. The set of computations allowed at each node, together with the possible graph structures, defines a family of functions. Taking edges to point in the direction of data flow, input nodes have no parents and output nodes have no children. A special property of such a flow graph is its depth: the length of the longest path from an input to an output.
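The depth definition can be made concrete with a small sketch. It assumes the flow graph is acyclic, is stored as a dictionary mapping each node to the nodes that consume its value, and that path length is counted in edges. The function name `graph_depth` and the example graph for sin(a*x + b) are made up for illustration.

```python
from functools import lru_cache


def graph_depth(edges):
    """Return the length (in edges) of the longest input-to-output path.

    `edges` maps each node to the list of nodes that consume its value.
    The graph is assumed to be acyclic.
    """
    consumers = {c for children in edges.values() for c in children}
    nodes = set(edges) | consumers

    @lru_cache(maxsize=None)
    def longest_from(node):
        children = edges.get(node, [])
        if not children:  # output node: nothing consumes its value
            return 0
        return 1 + max(longest_from(c) for c in children)

    inputs = nodes - consumers  # input nodes: no node feeds into them
    return max(longest_from(n) for n in inputs)


# Hypothetical flow graph computing sin(a * x + b).
example = {
    "x": ["mul"],
    "a": ["mul"],
    "b": ["add"],
    "mul": ["add"],
    "add": ["sin"],
    "sin": [],
}

print(graph_depth(example))  # 3, along the path x -> mul -> add -> sin
```

With this convention the depth equals the number of computation nodes on the longest path, here `mul`, `add`, and `sin`.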

There is no strict threshold, but by one rule of thumb a neural network more than 8 layers deep counts as deep learning. A learning model with multiple hidden layers is the architecture of deep learning. By combining low-level features, deep learning forms more abstract high-level representations (attribute categories or features) and thereby discovers distributed feature representations of the data.

The "depth" of deep learning refers to the number of layers passed through from the "input layer" to the "output layer", that is, the number of "hidden layers". The more layers, the deeper the network, so the more complex the problem, the more layers are needed. Besides the number of layers, the number of "neurons" per layer (the small yellow circles in the diagram) also tends to be large. For example, AlphaGo's policy network has 13 layers, with 192 convolutional filters per layer.
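To make "number of layers" and "neurons per layer" tangible, here is a minimal sketch that summarizes a network from a list of layer sizes. It assumes a plain fully connected network with one bias per neuron, which is a simplification and not how AlphaGo's convolutional policy network is built. The helper `describe_mlp` and the layer sizes are hypothetical.

```python
def describe_mlp(layer_sizes):
    """Summarize a fully connected network given [input, hidden..., output] sizes."""
    hidden = layer_sizes[1:-1]  # everything between the input and output layers
    # Each layer has one weight per (input, output) pair plus one bias per output.
    parameters = sum(
        (n_in + 1) * n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )
    return {
        "hidden_layers": len(hidden),
        "hidden_neurons": sum(hidden),
        "parameters": parameters,
    }


# Hypothetical sizes: 4 inputs, 2 outputs, hidden layers of 8 neurons each.
shallow = describe_mlp([4, 8, 2])
deep = describe_mlp([4, 8, 8, 8, 2])

print(shallow)  # {'hidden_layers': 1, 'hidden_neurons': 8, 'parameters': 58}
print(deep)     # {'hidden_layers': 3, 'hidden_neurons': 24, 'parameters': 202}
```

Adding hidden layers increases the depth from 1 to 3 here, while the width of each hidden layer stays at 8.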

Deep learning can learn a deep nonlinear network structure, approximate complex functions, and build distributed representations of the input data, and it has shown a strong ability to learn the essential features of a data set from a small set of samples. The benefit of multiple layers is that complex functions can be represented with fewer parameters.

The essence of deep learning is to learn more useful features by building machine learning models with many hidden layers and training them on massive amounts of data, ultimately improving the accuracy of classification or prediction. The "deep model" is therefore the means, and "feature learning" is the goal. Deep learning emphasizes the depth of the model structure and highlights the importance of feature learning: through layer-by-layer feature transformation, the representation of a sample in the original space is mapped into a new feature space in which classification or prediction becomes easier. Compared with constructing features from hand-crafted rules, learning features from big data is better at capturing the rich intrinsic information in the data.
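A tiny illustration of how a feature transformation can make classification easier is the XOR problem: no single linear threshold separates its classes in the original input space, but one does after a hidden layer re-describes the inputs. In the sketch below the hidden-layer weights are set by hand purely for illustration; in deep learning they would be learned from data. All function names are made up for this example.

```python
def step(z):
    """Threshold activation: 1 if the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0


def hidden_features(x1, x2):
    """Map the raw inputs to a new feature space (hand-set weights)."""
    h_or = step(x1 + x2 - 0.5)   # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)  # fires only if both inputs are 1
    return h_or, h_and


def classify(x1, x2):
    """A single linear threshold on the new features computes XOR."""
    h_or, h_and = hidden_features(x1, x2)
    return step(h_or - h_and - 0.5)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
features = [hidden_features(a, b) for a, b in inputs]
predictions = [classify(a, b) for a, b in inputs]

print(features)     # [(0, 0), (1, 0), (1, 0), (1, 1)]
print(predictions)  # [0, 1, 1, 0]
```

In the new feature space the two positive examples collapse onto the same point `(1, 0)`, so one straight line is enough to separate the classes.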
