Deep learning and neuroscience are both very large fields now, and while learning them it is always difficult to interpret the connection between the two correctly.

Neurons:

In deep learning, the neuron is the lowest-level unit. In the perceptron model it is just wx + b followed by an activation function, and that is all there is to it: the inputs and outputs are numbers and the computation is fully understood. With the parameters known, the output can be computed from the input, and, for a single input with an invertible activation function, the input can also be recovered from the output.
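A minimal sketch of the single perceptron described above, assuming a scalar input and a sigmoid activation (both choices are illustrative, not from the original). The inverse only works because there is one input, w is non-zero, and the sigmoid is strictly monotonic; with several inputs, many different input vectors map to the same output.

```python
import math

def perceptron(x, w, b):
    """Forward pass: weighted sum w*x + b followed by a sigmoid activation."""
    z = w * x + b
    return 1.0 / (1.0 + math.exp(-z))

def invert_perceptron(y, w, b):
    """Recover x from y. Valid only for a single input, w != 0,
    and an invertible activation (the sigmoid maps onto (0, 1))."""
    if not 0.0 < y < 1.0:
        raise ValueError("sigmoid output must lie strictly between 0 and 1")
    if w == 0:
        raise ValueError("w must be non-zero to invert")
    z = math.log(y / (1.0 - y))  # logit: the inverse of the sigmoid
    return (z - b) / w

y = perceptron(0.7, 2.0, -0.5)
x_recovered = invert_perceptron(y, 2.0, -0.5)  # approximately 0.7
```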

In neuroscience, however, the neuron is not the lowest-level unit; some researchers, for example, work on ion channels. A neuron's inputs can be divided into three parts: electrical signals from other neurons, chemical signals, and the signal encoding within the cell itself (excitatory or inhibitory type, which could perhaps be compared to an activation function). Its outputs also come in three kinds: electrical output, chemical output, and changes to its own state (LTP, long-term potentiation, and LTD, long-term depression).

Do we understand neurons well enough? Personally, I doubt it. A few days ago I read about a new finding on neurons. The general idea is that neurons respond not only to a single signal but also to signals arriving at particular intervals, so their underlying coding capacity is actually stronger than we assumed. Even after neuroscience has developed for so long, it may not be true that we really understand neurons.

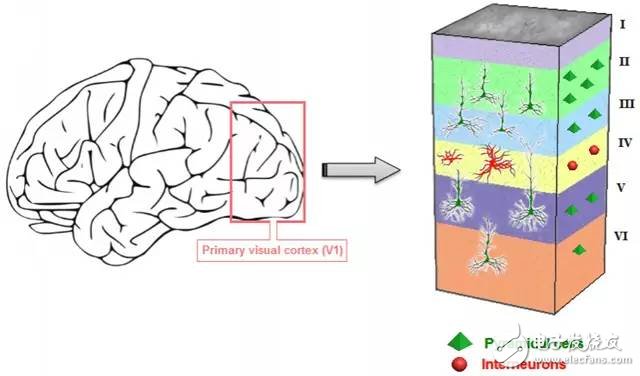

In addition, in a deep neural network most nodes are equivalent, but in the human nervous system this is not the case. Neuronal morphology can vary greatly between brain regions and even within a single region; for example, the six layers of V1 are distinguished by differences in neuronal morphology. From this perspective, the human nervous system is more complicated. I do not rule out that each neuron could be replaced by a node with different initialization parameters, but for now its complexity is still higher than that of a deep neural network.

Signal coding method:

As for coding, neurons in neuroscience generate all-or-none (0/1) action potentials, and signals are encoded by the frequency of those action potentials (this holds for most of the brain; other forms exist in the periphery). What about artificial neural networks? Most of the ones we have heard of and seen do not encode information this way, but spiking neural networks do. (Today at the ASSC meeting I saw a very interesting piece of work, which I will write about later.)
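To illustrate rate coding, here is a minimal sketch of a leaky integrate-and-fire neuron, a common simplified model in spiking neural networks. All parameter values (membrane time constant, threshold, resistance, units of mV, ms, nA, and MΩ) are illustrative assumptions. Each spike is all-or-none; the strength of the input shows up only in how often the neuron fires.

```python
def lif_spike_count(current, duration_ms=1000.0, dt=0.1, tau_m=20.0,
                    v_rest=-70.0, v_thresh=-54.0, v_reset=-70.0, r_m=10.0):
    """Simulate a leaky integrate-and-fire neuron with constant input current
    (nA, assumed) and return the number of spikes in duration_ms.
    With the default 1000 ms duration, the count equals the firing rate in Hz."""
    v = v_rest
    spikes = 0
    for _ in range(int(duration_ms / dt)):
        # Euler step: the membrane leaks toward rest and is driven by r_m * current.
        v += dt / tau_m * (-(v - v_rest) + r_m * current)
        if v >= v_thresh:
            spikes += 1   # all-or-none event: a 1 in the 0/1 spike train
            v = v_reset   # reset after firing
    return spikes

# Stronger input -> higher spike frequency; weak input never reaches threshold.
rates = [lif_spike_count(i) for i in (1.0, 2.0, 3.0)]
```

With these assumed parameters, an input of 1.0 settles 10 mV above rest, below the 16 mV needed to fire, so it produces no spikes at all.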

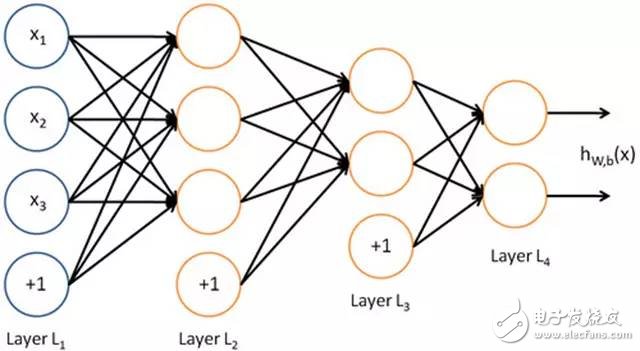

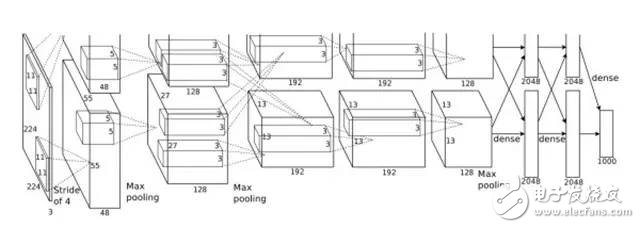

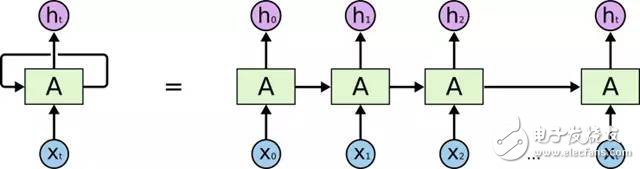

Neural network structure:

Current deep neural networks mainly use three structures: DNN (fully connected), CNN (convolutional), and RNN (recurrent). Something newer, like attention? Sorry, I haven't read those papers yet, so I dare not comment...

Figures: DNN, CNN, and RNN structures.
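The three structures named above differ mainly in how units are connected. This is a minimal NumPy sketch of each, with illustrative function names and a tanh activation chosen as an assumption: a fully connected layer where every output sees every input, a 1D "valid" convolution (cross-correlation, as deep-learning libraries use the term) where each output sees a local window with shared weights, and a simple RNN that reuses the same weights at every time step.

```python
import numpy as np

def dense(x, W, b):
    """Fully connected layer: every output unit sees every input unit."""
    return np.tanh(W @ x + b)

def conv1d(x, kernel):
    """1D valid convolution: each output sees only a local window,
    and the same kernel weights are shared across all positions."""
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) for i in range(len(x) - k + 1)])

def rnn(xs, W_x, W_h, h0):
    """Simple recurrent network: the same weights are reused at every time
    step, and the hidden state h carries information forward in time."""
    h = h0
    states = []
    for x in xs:
        h = np.tanh(W_x @ x + W_h @ h)
        states.append(h)
    return states

edges = conv1d(np.array([1.0, 2.0, 3.0, 4.0]), np.array([1.0, 1.0]))  # [3., 5., 7.]
```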

Now take a network structure from neuroscience, using V1 as an example:

Contrary to what you might think, vision is divided among V1, V2, V3, V4, V5 (MT), FFA, and further brain areas that control more advanced functions. Within each of these small visual areas, neurons are not simply wired to one another; there are further layered structures. I found a picture on Google (the specific article doesn't matter) that mainly describes the fine structure and connectivity of V1. The main function of V1 is to detect points and line segments at different orientations (Hubel and Wiesel's work on cats in the 1950s), but in fact V1 also has some sensitivity to color.

At this level of comparison, my own understanding is that the human neural network is DNN + CNN + RNN, with spikes as the encoding method: within a layer it is more like a DNN, between layers it is very similar to a CNN, and unrolled in time it is an RNN.

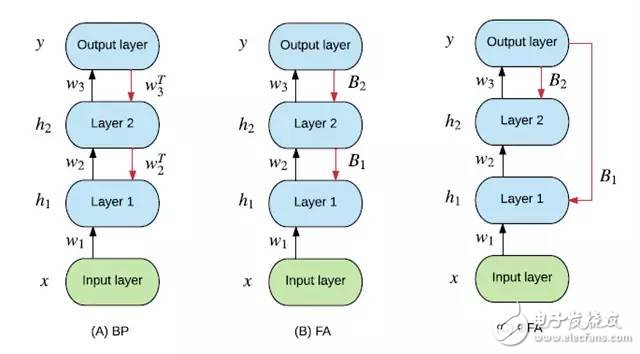

Training method:

Deep neural networks are trained mainly by backpropagation: the error is propagated from the output layer back to the first layer, and each layer corrects its weights along the way. But there is no similar backpropagation mechanism in the brain. The simplest explanation is that signal transmission between neurons is directional, so there is no path for the signal to return to the previous layer. For example, suppose I reach for a cup at hand and see that my hand is off to the right. I will naturally move my whole arm to the left and try to grab the cup again. Nobody seems to correct the fingers first, then the hand, and finally the arm, layer by layer, yet I often still succeed in the end. The figure quoted here comes from the next article.

Our brain works more like the last scheme in that figure, DFA (direct feedback alignment): when something goes wrong, the error is sent directly to a place closer to the input, and that part is then retrained.
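A minimal sketch contrasting backpropagation with direct feedback alignment on a tiny two-layer network, assuming a synthetic linear regression task, a tanh hidden layer, and illustrative sizes and learning rate. The only difference is how the hidden layer receives its error: backpropagation sends it back through the transpose of the forward weights (W2.T), while DFA sends the output error straight to the hidden layer through a fixed random matrix B that is never trained.

```python
import numpy as np

def train(mode, steps=2000, lr=0.05, seed=0):
    """Train a 4-16-2 network on a synthetic linear target.
    mode="bp" uses backpropagation, mode="dfa" uses direct feedback alignment.
    Returns the loss recorded at every step."""
    if mode not in ("bp", "dfa"):
        raise ValueError("mode must be 'bp' or 'dfa'")
    rng = np.random.default_rng(seed)
    n_in, n_hid, n_out, n_samples = 4, 16, 2, 64
    X = rng.normal(size=(n_in, n_samples))
    T = rng.normal(size=(n_out, n_in)) @ X            # synthetic linear target
    W1 = rng.normal(scale=0.5, size=(n_hid, n_in))
    W2 = rng.normal(scale=0.5, size=(n_out, n_hid))
    B = rng.normal(scale=0.5, size=(n_hid, n_out))    # fixed random feedback (DFA only)
    losses = []
    for _ in range(steps):
        H = np.tanh(W1 @ X)                           # hidden layer
        Y = W2 @ H                                    # linear output layer
        E = Y - T                                     # output error
        losses.append(0.5 * np.mean(np.sum(E ** 2, axis=0)))
        if mode == "bp":
            dH = (W2.T @ E) * (1 - H ** 2)            # error routed back through W2
        else:
            dH = (B @ E) * (1 - H ** 2)               # error sent directly via fixed B
        W2 -= lr * E @ H.T / n_samples
        W1 -= lr * dH @ X.T / n_samples
    return losses

bp_losses = train("bp")
dfa_losses = train("dfa")
```

In DFA, no signal travels backward through the forward weights, which is closer to the directional signaling described above; the forward weights tend to come into alignment with B during training.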

Memory and forgetting:

When it comes to memory, I'll mainly discuss LSTM. An LSTM stores what it has learned in the weights of its nodes and carries information across time steps in its cell state, with a dedicated forget gate that controls how quickly that information is forgotten; all of this is stored as numbers. In the nervous system, memory is stored through the formation and disappearance of synapses in certain brain regions. What they have in common is that both change gradually during training: because of the backpropagation mechanism on one side and the biological nature of the nervous system on the other, both can only change relatively slowly, whether during training or during continual learning. In terms of learning rate, they are fairly similar.
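A minimal sketch of one step of a standard LSTM cell in NumPy, showing where the forget gate acts. The weight layout (gates stacked in the order input, forget, candidate, output) is an assumption for illustration; libraries differ in how they arrange these weights. The forget gate f multiplies the previous cell state, so f near 1 keeps the memory and f near 0 erases it.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM step. Assumed shapes: W (4n, d_in), U (4n, n), b (4n,),
    with gates stacked as [input, forget, candidate, output]."""
    n = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:n])          # input gate: how much new content to write
    f = sigmoid(z[n:2 * n])      # forget gate: fraction of old cell state kept
    g = np.tanh(z[2 * n:3 * n])  # candidate content
    o = sigmoid(z[3 * n:4 * n])  # output gate
    c = f * c_prev + i * g       # cell state: the LSTM's running memory
    h = o * np.tanh(c)
    return h, c
```

In a trained network, f is computed from the current input and hidden state at every step, while the weights W, U, and b that produce it change only slowly through backpropagation.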

Now for forgetting. In an LSTM, forgetting is controlled by the forget gate. In the nervous system, I think it is related to STDP (spike-timing-dependent plasticity). STDP is based on the Hebbian hypothesis, "fire together, wire together": neurons that fire synchronously tend to form stronger connections. STDP extends this by taking into account the order in which the two neurons fire.

Briefly, if the presynaptic neuron fires before the postsynaptic neuron (signaling between neurons is directional, from presynaptic to postsynaptic), the synapse enters a state of LTP (long-term potentiation) and responds more strongly to signals from the presynaptic neuron. Conversely, if the presynaptic neuron fires after the postsynaptic neuron, the synapse enters a state of LTD (long-term depression), which indicates that the two did not receive a signal from the same source and their activity is uncorrelated, and it responds more weakly for a period of time.
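The timing rule above is often modeled with a pair-based exponential STDP window. This is a minimal sketch of that common textbook form; the amplitudes and time constants are illustrative assumptions, not measured values. A positive interval (pre fires before post) strengthens the synapse, a negative one weakens it, and the effect fades as the spikes move further apart.

```python
import math

def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair.
    delta_t = t_post - t_pre in ms; parameter values are illustrative."""
    if delta_t > 0:
        # Pre fires before post: potentiation (LTP), decaying with the interval.
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        # Pre fires after post: depression (LTD), decaying with the interval.
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0

changes = [stdp_dw(dt) for dt in (-30.0, -5.0, 5.0, 30.0)]
```

Unlike a gradient-trained gate weight, this update depends only on locally available spike times, which is one reason it can act quickly.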

The gate weights inside a deep neural network are also trained by backpropagation, so they too change gradually and lag somewhat behind rapidly changing stimuli. From this perspective, the human nervous system is more flexible and can switch states within a short period of time.

That is probably as far as I can go, because my own research is on visual attention and is done mostly in humans, so I am not especially familiar with research at the intermediate circuit level. Going further up, to the workings of the human cerebral cortex, I feel our knowledge is very limited. For most brain regions we don't know how they work; we can only match different regions with different functions (not necessarily accurately). Comparing their similarities and differences from this perspective would not be very responsible and would easily draw criticism.

Summary of views:

So many higher life forms on Earth share similar underlying network structures, and one of them has built such a great civilization. The neural network structure has at least proved itself to be an effective form. But is it the globally optimal solution for this form of intelligence? Personally, I am skeptical.

Neural networks are an effective structure, so I am not at all surprised that people use them to produce good results. But if the goal is simulation, trying to copy the brain along this direction, I am personally not very optimistic. We learned echolocation from bats, and sonar has developed far beyond bats in range and performance. There are two reasons we can surpass bats. First, our technology is extensible: once the underlying principle is shared, solving the engineering and mechanical problems lets us detect objects thousands of meters or even tens of kilometers away with relative ease. Second, we need to and bats don't: they sleep in caves every day, have no need to detect anything tens of kilometers away, and so could never have evolved that ability.

In fact, the human brain is very similar. The brain is a product of evolution, constantly shaped by the environment; people have not evolved the computing power of computers because they did not need it. At the same time, the brain carries some things we no longer need: thousands of years of hunger gave us a craving for fat, and childhood gives us a craving for sugar. Given this, if we are building something brain-like for the same purpose, isn't it better to take only the essence?

What I described above is the ideal situation, in which we understand the brain thoroughly and know what to remove and what to keep. For now, though, we may have to rely on simulation.

That is roughly my view. To sum up, deep neural networks and the cerebral cortex have common ground, but one is not a simulation of the other; rather, both reflect the same way of approaching the problem.
